DevOps Engineers: An overview of roles and responsibilities

Many tech giants have integrated the DevOps approach into their software development teams. Let’s discuss the role of the DevOps Engineer.

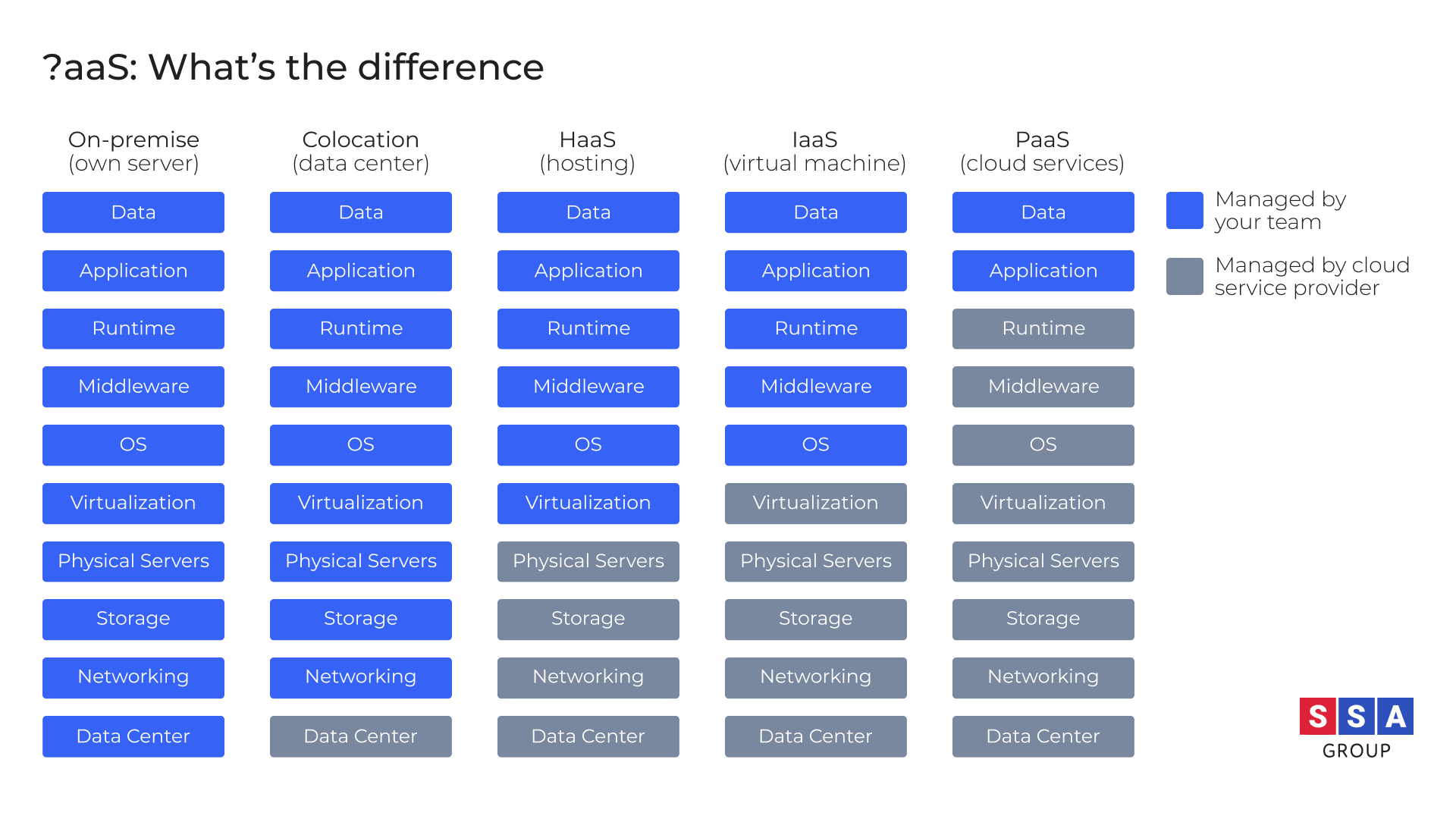

Several models and deployment strategies have emerged to meet the specific needs of different types of business. Each model provides a different level of control, flexibility and management over a company’s IT infrastructure. Today, three main options are available for project deployment: bare metal servers, cloud computing and hybrid solutions.

Colocation and hosting services are also available to businesses. These deployment options differ in which operations are managed by the service provider and which by the client’s own IT operations team.

Below we examine the primary software deployment strategies and highlight their pros and cons for different business types.

A bare metal (dedicated) server is a computer designed specifically as a single-tenant environment for hosting software products. The most significant benefits of bare metal servers are stable, predictable performance, reliability and unrestricted direct access to the physical hardware, which offers more options when building your own platform for hosting an application. Each component is customisable, so it is possible to build anything from a powerhouse to an inexpensive, low-power solution.

Many experts say that the days of bare metal servers are over. However, some industries still traditionally rely on dedicated hosting and colocation, for instance banking and financial services, healthcare and government. These industries require an extremely high level of performance, power and data security, and in such cases a dedicated server is the only option.

For government applications, data security is of primary importance. Hosting solutions must comply with numerous national regulatory regimes, be accessible only to personnel who have passed a strict screening process, and be operated from within national territory. These requirements cannot realistically be met by facilities that are not on-premise.

Bare metal is ideal for business intelligence and data-crunching applications with extremely heavy workloads. Large enterprises that deal with big data often rely on dedicated servers: owing to direct access to the hardware, they can process more data than virtualised alternatives. Without a virtualisation layer, dedicated servers offer lower latency and lower CPU overhead, which means faster results and higher data throughput.

Performance is also crucial for e-commerce businesses, where every millisecond matters. A dedicated server lets a business be certain that 100% of the server’s resources are at its disposal.

The most significant disadvantages of bare metal servers are the considerable upfront investment in buying machines, licensing software and hiring a TechOps team. In addition, dedicated infrastructure lacks flexibility and scalability: scaling bare metal resources means buying and deploying new servers, which is not cost-effective.
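To make the scaling argument concrete, here is a minimal Python sketch, assuming a hypothetical workload measured in requests per second and a fixed per-server capacity. Because a bare metal fleet has to be bought for its peak load, much of that hardware sits idle at average load:

```python
import math


def bare_metal_fleet(peak_rps: float, avg_rps: float, rps_per_server: float) -> tuple[int, float]:
    """Return (servers to buy, average utilisation) for a fleet sized for peak load."""
    # Physical servers must cover the busiest moment, so round the peak up
    servers = math.ceil(peak_rps / rps_per_server)
    # Fraction of the purchased capacity that is actually used on average
    utilisation = avg_rps / (servers * rps_per_server)
    return servers, utilisation


# Hypothetical workload: 9,000 req/s at peak, 2,000 req/s on average,
# and each server assumed to handle 1,000 req/s
servers, util = bare_metal_fleet(peak_rps=9_000, avg_rps=2_000, rps_per_server=1_000)
print(servers, f"{util:.0%}")
```

With these made-up figures, nine servers must be bought, yet on average they run at about 22% of capacity, and every further increase in peak demand means another hardware purchase.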

Over the past few years, the number of cloud-based services has increased dramatically. According to the State of DevOps 2019 survey, 80% of companies use cloud platforms to host their applications or services, and experts expect this share to keep growing year on year. On average, companies expect to allocate about a third (32%) of their total IT budget to cloud computing in the next year.

The primary reason more and more companies are shifting to cloud computing is the desire to simplify their in-house operations. The greatest benefit of cloud technologies for businesses is that there is no need to keep in-house technical experts to maintain the physical infrastructure. Various cloud management platforms also make it easier to administer cloud resources.

Cloud solution providers offer various services and models to deploy software products. The primary models are:

Some cloud solution providers offer all the above-mentioned services, whereas others focus on a particular model. Let’s take Google’s offerings as an example:

Service providers can deliver cloud computing services in two main ways: through public or private clouds.

A public cloud consists of many servers in the provider’s data centres whose resources are pooled and shared among multiple customers, who connect to it over the internet. The public cloud provider takes over maintenance of the cloud infrastructure, allowing clients to focus on their core business.

Cloud technologies are cost-effective hosting solutions that can easily be automated and scaled. Public cloud is a good option for small and medium businesses, as it doesn’t require any significant upfront costs: the client pays only for the resources consumed and for the time they are used. Virtual resources can be scaled up at any time and scaled down when usage is low. However, to actually achieve cost savings, businesses need an in-house CloudOps Engineer with strong expertise in public cloud management tools.
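The pay-as-you-go argument can be illustrated with a small Python sketch. The hourly price, per-instance capacity and daily load profile are all hypothetical; the point is only to compare capacity sized once for the peak hour with capacity adjusted hour by hour:

```python
import math

HOURLY_RATE = 0.10              # hypothetical price of one instance-hour, in USD
CAPACITY_PER_INSTANCE = 1_000   # hypothetical requests/s one instance can serve


def instances_needed(load_rps: float) -> int:
    """Smallest number of instances that covers the load (at least one)."""
    return max(1, math.ceil(load_rps / CAPACITY_PER_INSTANCE))


def fixed_cost(hourly_load: list[float]) -> float:
    """Cost when capacity is sized once for the peak hour and kept running."""
    peak = instances_needed(max(hourly_load))
    return peak * HOURLY_RATE * len(hourly_load)


def pay_as_you_go_cost(hourly_load: list[float]) -> float:
    """Cost when capacity is scaled every hour to match demand."""
    return sum(instances_needed(load) * HOURLY_RATE for load in hourly_load)


# Hypothetical 24-hour profile: quiet at night, busy during business hours
day = [500] * 8 + [4_000] * 10 + [1_500] * 6
print(f"fixed: ${fixed_cost(day):.2f}, pay-as-you-go: ${pay_as_you_go_cost(day):.2f}")
```

With these assumed numbers, hourly scaling costs $6.00 per day versus $9.60 for peak-sized capacity. Real cloud bills include further factors (storage, data transfer, commitment discounts) that this sketch ignores.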

As infrastructure is delivered pre-configured, the implementation is easier and faster than on bare metal servers. However, there is limited control of the underlying infrastructure and no opportunity to choose what servers to use, what software to install or how to set up the architecture.

The primary weak spot of public clouds is security. Because data and applications can be spread across several servers, data centres or even countries, public cloud services often fail to meet the demands of industries that must comply with strict regulations such as HIPAA, PCI DSS and FedRAMP.

Another disadvantage of public clouds is performance. Because multiple customers share the same physical resources, performance can be significantly lower and less predictable than on a dedicated server.

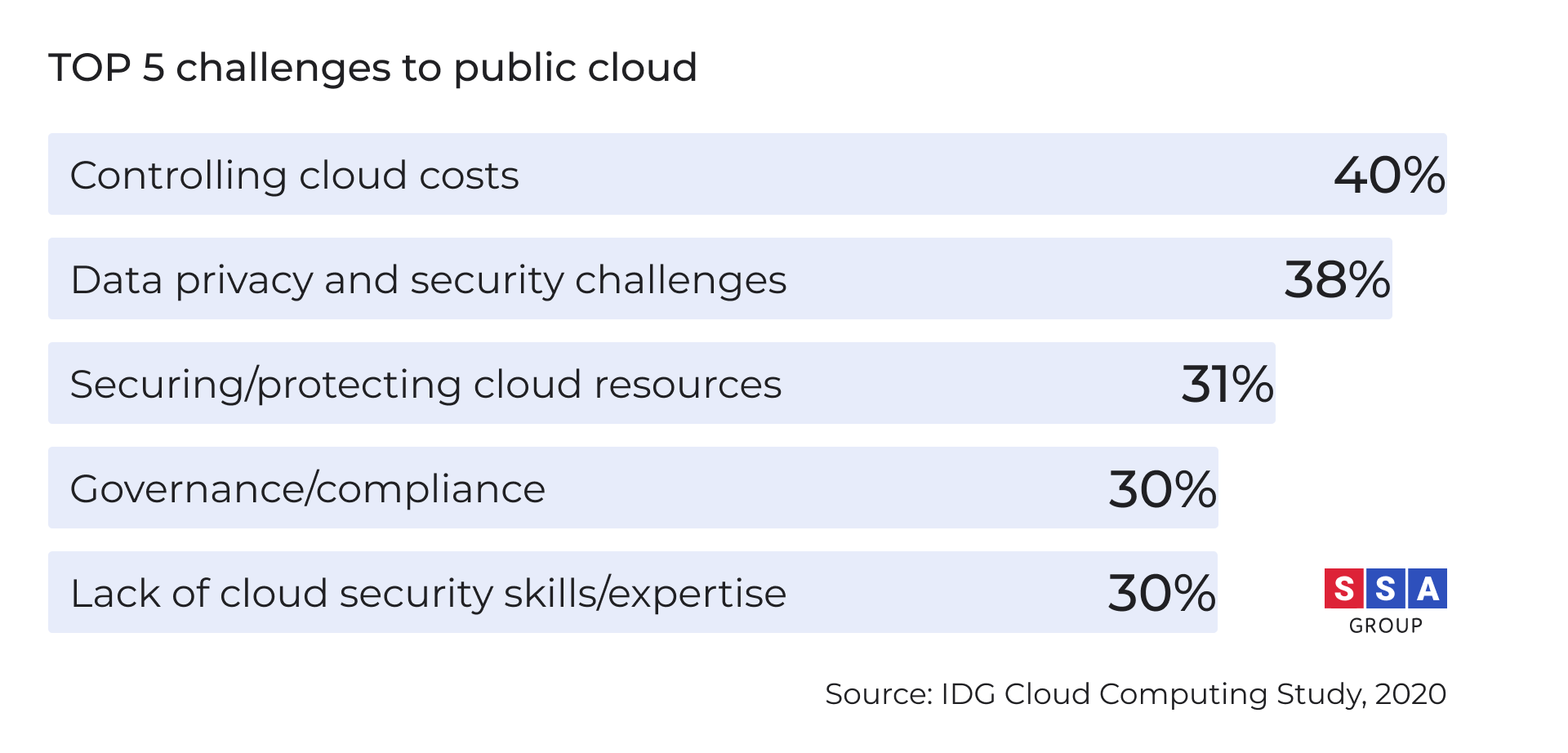

According to the IDG Cloud Computing Survey, the most significant challenges for companies in using public cloud resources are as follows:

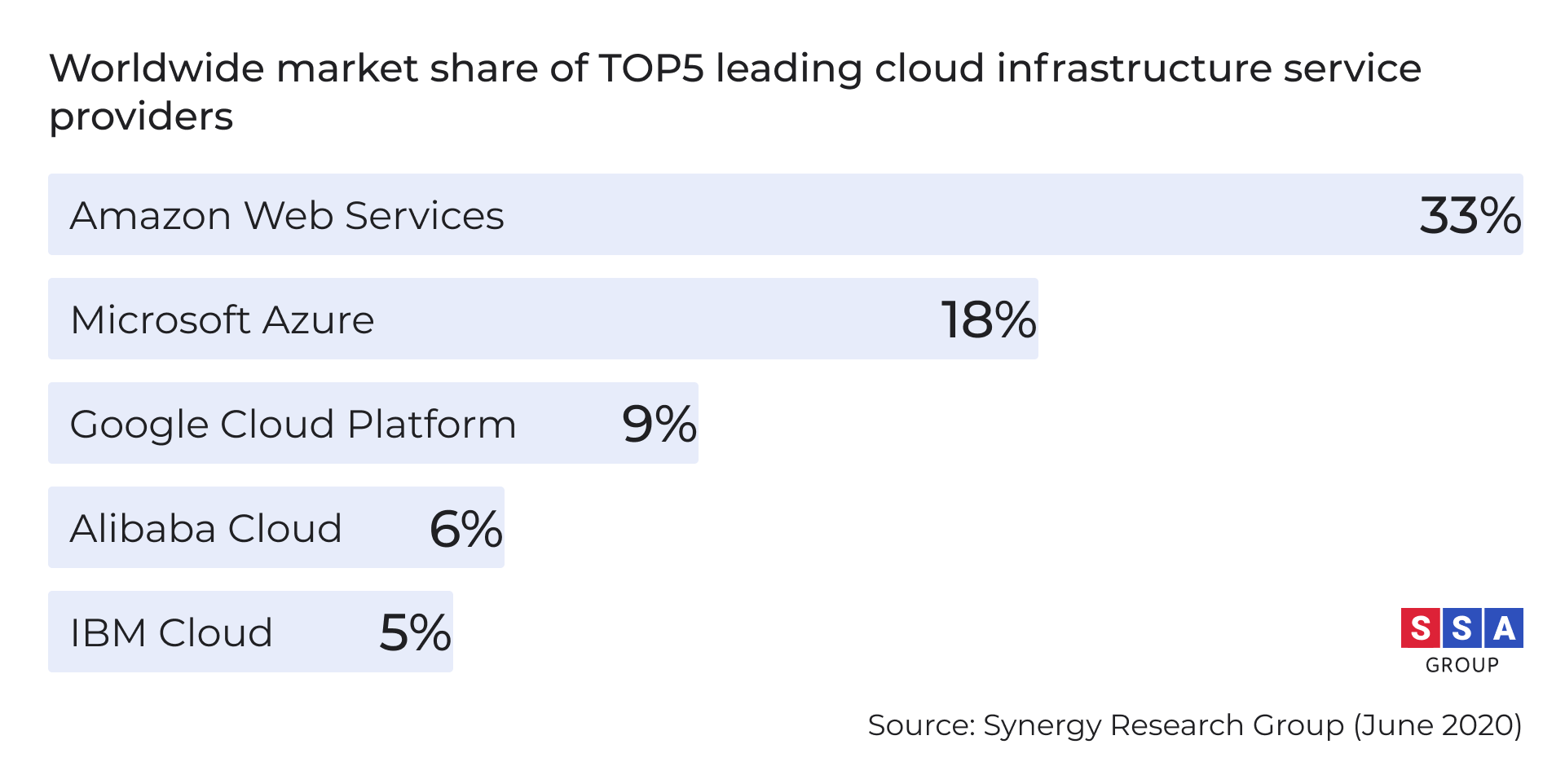

Three public cloud providers hold the largest market share: Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform. In the second quarter of 2020, Amazon’s share of the worldwide cloud infrastructure market was 33%. The top three providers offer a wide range of cloud products and services with a flexible pay-as-you-go pricing model that suits the budgets of all types of businesses.

Instead of competing with the public cloud giants, some providers focus on private cloud services for enterprises and for companies in industries that cannot use public clouds. For instance, IBM acquired Red Hat, a company that provides open-source software products to enterprises, including operating system platforms, middleware and virtualisation products based on OpenStack technology.

Equally, Dell Technologies, Cisco Systems, and Hewlett Packard Enterprise (HPE) offer flexibility in choosing the cloud computing model, supporting multi-cloud software deployment strategies that are desirable for most enterprises.

A private cloud is a single-tenant cloud environment, traditionally run on-premise or on rented virtual server resources. The IT infrastructure is dedicated to a single customer and protected by a firewall against unauthorised access; this is the main difference between private and public clouds. For security, private cloud applications can be accessed only through closed Virtual Private Networks (VPNs) or through the client organisation’s intranet.
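The access rule described above (private cloud applications reachable only from the intranet or a VPN) is normally enforced by firewalls and network configuration rather than application code. Purely to illustrate the logic, here is a Python allowlist check using the standard `ipaddress` module; both address ranges are hypothetical:

```python
import ipaddress

# Hypothetical address ranges: the corporate intranet and the VPN client pool
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),      # intranet (assumed range)
    ipaddress.ip_network("172.16.50.0/24"),  # VPN clients (assumed range)
]


def is_allowed(client_ip: str) -> bool:
    """Admit a request only if it originates from the intranet or the VPN pool."""
    addr = ipaddress.ip_address(client_ip)
    # Membership test; an address of a different IP version is simply not contained
    return any(addr in net for net in ALLOWED_NETWORKS)


print(is_allowed("10.12.3.4"))    # address inside the intranet range
print(is_allowed("203.0.113.7"))  # address on the public internet
```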

This is the preferred solution for companies with strict data processing and security requirements due to high volumes of sensitive information. Enterprises, healthcare providers, financial institutions and government agencies often choose a private cloud as a more secure, compliant and scalable option than public clouds. Dedicated clouds give businesses full control over virtual machines, data and configurations, and unlike public clouds, a dedicated private cloud provides consistently high performance.

Private clouds are built on bare metal servers by virtualising the hardware and adding a management layer for the IT infrastructure, platforms, applications and data. OpenStack is a widely used open-source platform for building and managing such private clouds. Another approach is to use IaaS services; the leading providers are Red Hat, VMware, SAP HANA, Dell Cloud and Cisco Systems.

In terms of cost, building a dedicated private cloud can be significantly more expensive than hosting in a public one, but for organisations exposed to the risks of public cloud offerings, the investment is well worth it.

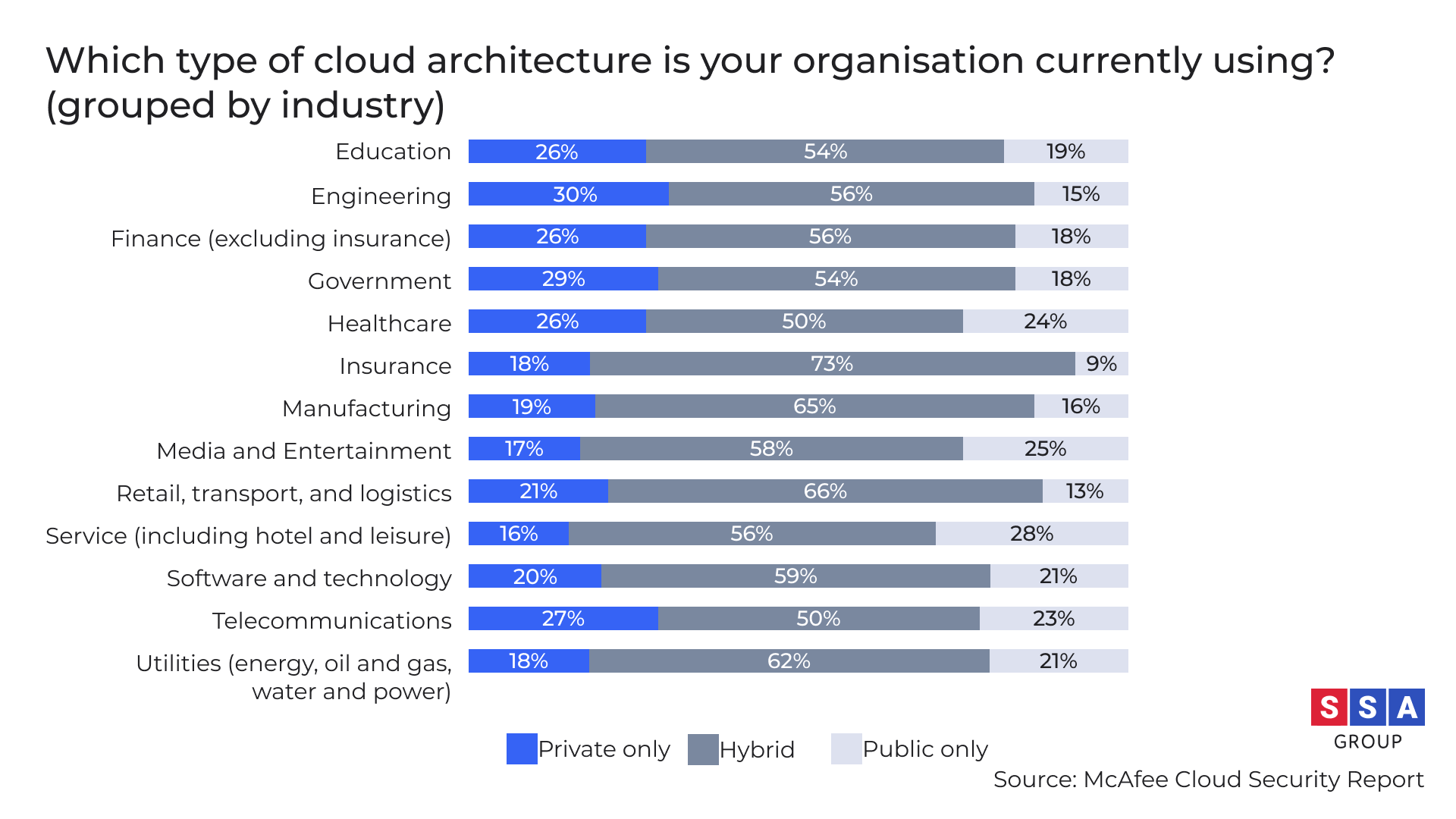

Hybrid strategies have become mainstream, allowing companies to combine the benefits of an on-premise environment with those of public and/or private clouds. Thanks to this flexibility, companies can build their own hybrid infrastructure to suit their business needs and budget. A mix of private and public clouds can provide high performance, system reliability and, when necessary, great scalability, and it is more cost-effective than a traditional bare metal solution or a private cloud alone.

In terms of security, hybrid clouds can be as reliable as a traditional bare metal solution. Companies can use private or on-premise servers to store critical data, while less sensitive workloads run in public clouds. Enterprises and companies in industries such as banking and financial services, healthcare or government often choose hybrid strategies for deploying their software products. According to IDC predictions, over 90% of enterprises worldwide will be using a hybrid cloud infrastructure by 2021.
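The data-placement policy behind a hybrid setup can be expressed as a simple rule. The sketch below is a hypothetical Python example: the three sensitivity tiers and the workload names are assumptions for illustration, not a standard classification:

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = 1      # e.g. marketing content (assumed tier)
    INTERNAL = 2    # e.g. internal business tools (assumed tier)
    REGULATED = 3   # e.g. payment or patient data (assumed tier)


def choose_environment(level: Sensitivity) -> str:
    """Map a workload's data sensitivity to a hosting environment (hypothetical policy)."""
    if level is Sensitivity.REGULATED:
        return "on-premise"      # critical data stays on company-controlled servers
    if level is Sensitivity.INTERNAL:
        return "private cloud"
    return "public cloud"        # less sensitive workloads use elastic public capacity


# Hypothetical workloads and their classification
workloads = {
    "payments-ledger": Sensitivity.REGULATED,
    "hr-portal": Sensitivity.INTERNAL,
    "marketing-site": Sensitivity.PUBLIC,
}
placement = {name: choose_environment(level) for name, level in workloads.items()}
print(placement)
```

Keeping the policy in one function makes it easy to audit which workloads may leave company-controlled infrastructure.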

Another option for businesses is the multi-cloud model, which involves using the services of two or more public cloud computing vendors at once. This strategy lowers the risk of cloud provider lock-in, offering more agility and flexibility in cost optimisation.
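A common way to keep multi-cloud options open is to write application code against a provider-neutral interface and keep vendor-specific code in thin adapters. The Python sketch below shows that pattern; `ObjectStore` and `InMemoryStore` are hypothetical names, and the in-memory class stands in for adapters that would wrap real vendor SDKs:

```python
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Provider-neutral storage interface the application codes against (hypothetical)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Stand-in for a vendor-specific adapter; a real one would call that vendor's SDK."""

    def __init__(self, provider: str) -> None:
        self.provider = provider
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def save_report(store: ObjectStore, report: bytes) -> None:
    # Business logic depends only on the interface, not on any single provider
    store.put("reports/latest", report)


# The same code runs unchanged against two hypothetical providers
vendor_a = InMemoryStore("vendor-a")
vendor_b = InMemoryStore("vendor-b")
for store in (vendor_a, vendor_b):
    save_report(store, b"q3 totals")
```

Switching providers then means writing a new adapter rather than changing business logic, which is what reduces lock-in in practice.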

Managing several environments and integrating data across a hybrid infrastructure can be challenging, and it is practically impossible without an in-house DevOps Engineer and a containerisation approach. Containerisation means packaging code units or whole applications together with their dependencies so that they run consistently on any infrastructure.

Containers share the host machine’s OS kernel instead of each running a full guest operating system, which increases server efficiency and reduces costs. IDC predicts that 50% of enterprise applications will be deployed in a containerised hybrid cloud environment by 2023.
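Because containers are lightweight, orchestrators can add or remove instances of a containerised application quickly as load changes. As one concrete example (not mentioned in the original text), the Kubernetes Horizontal Pod Autoscaler computes the replica count as `ceil(currentReplicas * currentMetricValue / desiredMetricValue)` and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). A minimal Python sketch of that rule, ignoring the min/max replica bounds a real autoscaler also applies:

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by an HPA-style proportional scaling rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave it unchanged
    # Scale proportionally to how far the metric is from its target, rounding up
    return math.ceil(current_replicas * ratio)


# CPU utilisation of 90% against a 60% target, then 20% against the same target
print(desired_replicas(4, current_metric=90, target_metric=60))
print(desired_replicas(4, current_metric=20, target_metric=60))
```

Rounding up errs on the side of having enough capacity, while the tolerance band prevents constant small adjustments when the metric hovers near its target.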

Cloud management platforms (CMPs) have also emerged to simplify the management, automation and orchestration of hybrid clouds. Cloud management platforms available on the market include OpenStack, IBM Multicloud Manager, VMware Cloud Management Platform, Azure Arc, Red Hat CloudForms and others.

In addition, many multi-cloud solution providers offer managed services for orchestrating hybrid infrastructure, e.g. Digital Ocean, Scaleway, Nexcess and Rackspace. For an additional fee, they provide extra support for the OS, applications and routine services, as well as an API and an online control panel for managing the infrastructure.
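API access of this kind can be illustrated with DigitalOcean, one of the providers named. Its public API v2 lists Droplets (virtual machines) via `GET https://api.digitalocean.com/v2/droplets` with a bearer token. The Python sketch below only builds the request with the standard library and does not send it; the token is a placeholder, and a real call needs a valid personal access token:

```python
import urllib.request

API_BASE = "https://api.digitalocean.com/v2"


def list_droplets_request(token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated request that lists Droplets."""
    return urllib.request.Request(
        f"{API_BASE}/droplets",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


req = list_droplets_request("YOUR_API_TOKEN")  # placeholder, not a real token
# Sending it with urllib.request.urlopen(req) would return a JSON document
# whose "droplets" field lists the account's virtual machines.
```

Scripting the same operations that the control panel exposes is what makes such infrastructure easy to automate.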

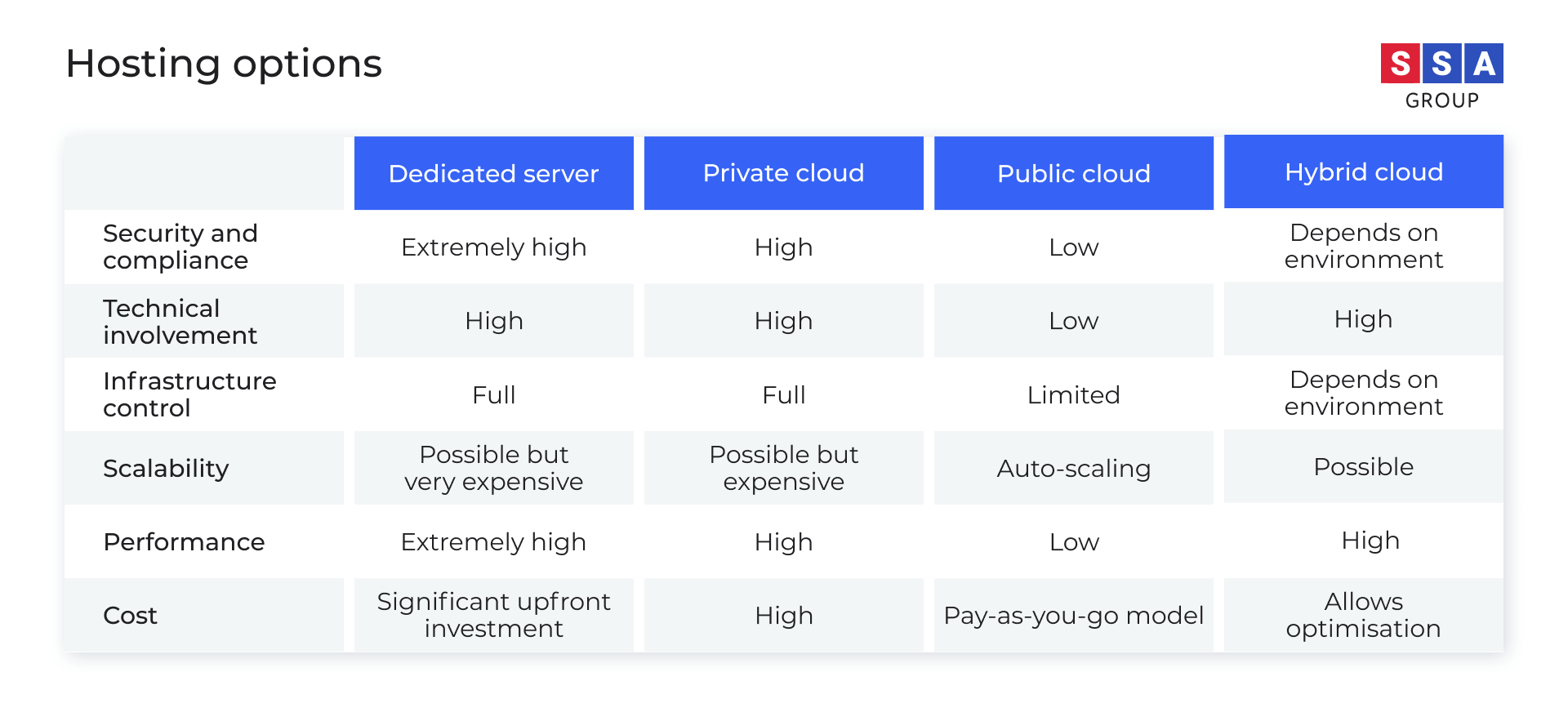

To summarise the primary pros and cons of each deployment option, we have compiled the following chart.

Find out more in our article DevOps Engineers: An overview of roles and responsibilities.

When choosing an effective deployment strategy, the business owner should consider all pros and cons and pay close attention to current business needs and opportunities for growth in the future. This requires a certain degree of maturity in the management of IT infrastructure. A common mistake made by organisations embarking on cloud adoption is believing that current on-premises practices are valid for cloud and hybrid models.

According to Gartner, mistakes in cloud adoption lead to an overspend of 20–50%. To mitigate risks and maximise cost savings, consider SSA Group as an experienced IT consultant with strong expertise in cloud solutions. Thank you for reading, and if you have any questions, we would be happy to hear from you.
